When you think about how a neural network can beat a Go champion or otherwise accomplish tasks that would be impractical for most computers, it’s tempting to attribute the success to math. Surely it’s the algorithms that let these networks solve certain problems so quickly, right? Not so fast.

Researchers from Harvard and MIT have determined that the nature of physics gives neural networks their edge.

When you write down the laws of physics mathematically, you can describe all of them using functions that share a few basic properties. As such, a neural network doesn’t need to understand every possible function (as a conventional computer would) to generate an answer; it just needs to know some fundamentals.
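
To make "some fundamentals" concrete, here is a minimal sketch of one such building block. It assumes, as an illustration rather than something stated above, that the simple function in question is the product of two numbers. The sketch approximates that product with four hidden units and a smooth activation. The names `softplus`, `approx_product`, and `scale` are hypothetical choices for this example, not part of the researchers' code.

```python
import math


def softplus(u):
    # Smooth activation function; its second derivative at 0 is 1/4.
    return math.log1p(math.exp(u))


def approx_product(x, y, scale=0.01):
    # Shrink the inputs so the activation's Taylor expansion around 0 applies.
    a, b = scale * x, scale * y
    # Four hidden units: the even-order terms combine so that the
    # leading term left over is 4 * softplus''(0) * a * b.
    hidden = (softplus(a + b) + softplus(-a - b)
              - softplus(a - b) - softplus(-a + b))
    # Undo the input scaling and the 4 * softplus''(0) = 1 factor.
    second_derivative_at_zero = 0.25
    return hidden / (4 * second_derivative_at_zero * scale ** 2)


if __name__ == "__main__":
    # The printed value should be close to -6 for these inputs.
    print(approx_product(3.0, -2.0))
```

The approximation improves as `scale` shrinks, until floating-point rounding starts to dominate. The point is only that a handful of units can stand in for a simple operation, which is the kind of fundamental a network can reuse.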

The network can use successive layers to approximate successive steps toward the solution, so it reaches a conclusion faster than a conventional computer when the problem has a hierarchical structure (such as when you’re mapping cosmic radiation).

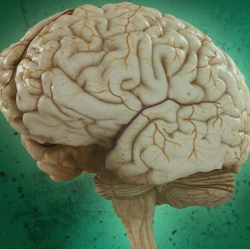

The insights could lead to better-designed artificial intelligence systems that exploit this inherent advantage more fully. They could also help you understand your own mind. Many neural networks are patterned after the human brain, which suggests that brains are ideally structured for understanding the world around them.