Even as toddlers we’re good at inferences. Take a two-year-old who first learns to recognize a dog and a cat at home, then a horse and a sheep at a petting zoo. The kid will then also be able to tell a dog from a sheep, even if he can’t yet articulate their differences.

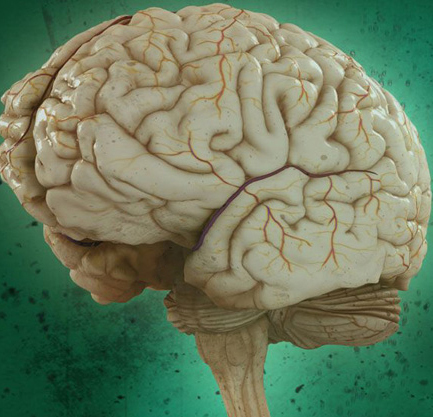

This ability comes so naturally to us it belies the complexity of the brain’s data-crunching processes under the hood. To make the logical leap, the child first needs to remember distinctions between his family pets.

When confronted with a new category, farm animals, his neural circuits call on those memories and seamlessly integrate them with the new information to update his mental model of the world.

It’s perhaps not surprising that even state-of-the-art machine learning algorithms struggle with this type of continuous learning. Part of the reason is how these algorithms are set up and trained.

An artificial neural network learns by adjusting synaptic weights (how strongly one artificial neuron connects to another), which in turn creates a sort of “memory” of what it has learned, embedded in the weights themselves. Because retraining the neural network on another task disrupts those weights, the AI is essentially forced to “forget” its previous knowledge as a prerequisite to learning something new. Imagine gluing together a bridge made out of toothpicks, only to have to rip it apart to build a skyscraper with the same material. The toothpicks are the same, but the memory of the bridge is now lost.
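To make the weight-overwriting idea concrete, here is a deliberately tiny sketch, not anything from the study: a "network" with a single weight, trained with plain gradient descent on one made-up task and then on another. The tasks, the function names `train` and `loss`, and the learning rate are all assumptions chosen for illustration.

```python
def train(w, data, lr=0.1, epochs=200):
    """Gradient descent on mean squared error for the one-weight model y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def loss(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


inputs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in inputs]   # hypothetical old task: y = 2x
task_b = [(x, -3.0 * x) for x in inputs]  # hypothetical new task: y = -3x

w = train(0.0, task_a)          # learn task A first
loss_a_before = loss(w, task_a)

w = train(w, task_b)            # then train on task B only
loss_a_after = loss(w, task_a)  # re-test the old task

print(f"task A error after learning A: {loss_a_before:.6f}")
print(f"task A error after learning B: {loss_a_after:.6f}")
```

The error on task A starts out near zero and jumps once the same weight has been pulled toward task B. This toy is an extreme case, since one weight cannot satisfy both tasks at once; real networks have far more capacity, yet sequential training can still overwrite what they knew.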

This Achilles’ heel is so detrimental it’s dubbed “catastrophic forgetting.” An algorithm that isn’t capable of retaining its previous memories is severely kneecapped in its ability to infer or generalize. It’s hardly what we consider intelligent.

But here’s the thing: if the human brain can do it, nature has already figured out a solution. Why not try it on AI?

A recent study by researchers at the University of Massachusetts Amherst and the Baylor College of Medicine did just that. Drawing inspiration from the mechanics of human memory, the team turbo-charged their algorithm with a powerful capability called “memory replay”—a sort of “rehearsal” of experiences in the brain that cements new learnings into long-lived memories.

What came as a surprise to the authors wasn’t that adding replay to an algorithm boosted its ability to retain what it had previously learned. Rather, it was that replay didn’t require exact memories to be stored and revisited. A rough approximation of the memory, generated by the network itself based on past experiences, was sufficient to give the algorithm a hefty memory boost.
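The idea of replaying generated rather than stored memories can be sketched in a few lines. This is a toy under loud assumptions, not the study's method: the model is a two-weight linear function, the "generator" is a stand-in (a per-feature Gaussian fitted to the old inputs, whereas the study's network produces its own replay), and every name here (`fit_generator`, `generate_replay`, the two tasks) is hypothetical.

```python
import random


def predict(w, x):
    """Two-weight linear model: y = w[0]*x[0] + w[1]*x[1]."""
    return w[0] * x[0] + w[1] * x[1]


def train(w, data, lr=0.2, epochs=2000):
    """Full-batch gradient descent on mean squared error; returns new weights."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for x, y in data:
            err = predict(w, x) - y
            grad[0] += 2 * err * x[0] / len(data)
            grad[1] += 2 * err * x[1] / len(data)
        w[0] -= lr * grad[0]
        w[1] -= lr * grad[1]
    return w


def loss(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def fit_generator(data):
    """Stand-in generator: keep only the mean and std of each input feature."""
    stats = []
    for i in range(2):
        vals = [x[i] for x, _ in data]
        mean = sum(vals) / len(vals)
        std = (sum((v - mean) ** 2 for v in vals) / len(vals)) ** 0.5
        stats.append((mean, std))
    return stats


def generate_replay(stats, old_w, n, rng):
    """Sample made-up inputs and label them with the old model's own outputs."""
    samples = []
    for _ in range(n):
        x = tuple(rng.gauss(mean, std) for mean, std in stats)
        samples.append((x, predict(old_w, x)))
    return samples


scales = [-1.0, -0.5, 0.5, 1.0]
task_a = [((s, 0.0), 2.0 * s) for s in scales]  # hypothetical old task
task_b = [((s, s), 5.0 * s) for s in scales]    # hypothetical new task

w_old = train([0.0, 0.0], task_a)
replay = generate_replay(fit_generator(task_a), w_old, 16, random.Random(0))

w_naive = train(w_old, task_b)            # new task only
w_replay = train(w_old, task_b + replay)  # new task mixed with generated replay

print(f"task A error, no replay:   {loss(w_naive, task_a):.4f}")
print(f"task A error, with replay: {loss(w_replay, task_a):.4f}")
print(f"task B error, with replay: {loss(w_replay, task_b):.4f}")
```

None of the original task A examples are reused in the second training run. The replayed samples are invented inputs labeled by the old model, yet in this toy they are enough to keep the old task intact while the new one is learned.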