Dr. Stuart Armstrong is a James Martin Research Fellow at the Future of Humanity Institute, University of Oxford. His Oxford D.Phil. was in parabolic geometry. He later moved into computational biochemistry, working on virtual screening of medicinal compounds. His current work at the Future of Humanity Institute centers on Artificial Intelligence (AI), in particular the potential risks of AI and the assessment of expert knowledge in AI predictions.

In this interview for PlanetTechNews, Stuart covers the aspects of AI that could be most relevant to the future of humanity, a topic of great interest to anyone following science and technology news.

Q. What is Artificial General Intelligence (AGI) as compared with the more basic AI systems that exist in the world today?

A. AGI is what used to be called simply AI: the desire to create a computerised object that had the same skills and abilities as a human being. In the early days of AI, there was a lot of hope that such an object could be built, and that it could then perform at human level at tasks such as chess or driving a car.

But many decades of research produced little progress towards such an AI, while making a lot of progress on narrower problems, such as chess. So the term AI began to be applied to narrower forms of computer intelligence, and the term AGI was created to signal clearly that this was the old form of AI: an intelligence with general human skills, not simply narrow ones in a particular domain.

What is interesting is that we’re not seeing any real sign of AGI, but we are getting narrow AI performance (such as Watson’s triumph on Jeopardy!) that previous generations had assumed would be impossible without full AI. So we’re in something of a paradoxical situation: it’s getting harder and harder to find clear examples of tasks that only humans can do, yet we’re not seeing any signs of a true general intelligence.

Q. What are your areas of expertise and how did you become involved?

A. My original areas of expertise were mathematics and medical research, but I’ve branched out since then, developing expertise in decision theory, anthropic probability, human biases and assessing expert competence. It’s these last two that are the most relevant to predicting the future of AGI. Many prominent and less prominent experts have made predictions for AGI, and it’s these predictions that provide our best estimate for the arrival date of AGI and what form it will take. Unfortunately, we should expect these predictions not to be very good (true expertise develops best with rapid feedback, and there can be no such feedback for AGI), and this is borne out in practice: expert predictions are all over the place, falling into no reliable pattern, and based on very weak reasoning.

As for getting involved with the Future of Humanity Institute… it’s an institute doing scientific and philosophical work about the far future and the most important issues facing humanity. How could I not get involved?

Q. What are your plans for the future?

A. Analysis of hard-to-predict risks, analysis of systemic failures in prediction, some more work on AGI safety, and other fun things.

Q. Why is it so difficult to create a human-like AI that can learn a variety of tasks on its own without needing to be reprogrammed?

A. Because we have extraordinarily poor introspection. Humans are the only truly general intelligences we know about, but we don’t know much of anything about how we think and what processes go on in our brains. We initially thought that everything that was hard for humans (like chess and formal logic) would be hard for computers, while everything easy (like shape recognition and moving in 3 dimensions) would be easy. It wasn’t a stupid idea at the time, but in fact, the reverse has turned out to be true (that’s the so-called "Moravec’s paradox").

Human skills (especially social skills) have been shaped by millions of years of evolution, and it has proved very hard to code them into a computer, because we’ve evolved to use these skills, not to understand them. In fact, analysing and understanding ourselves relies on very recently evolved abilities, which we don’t possess to a very high level (consider how hard learning abstract math is for most humans, compared with learning how to gossip, even though on any "objective" scale gossip is much harder than math).

So we’re essentially very good at doing some things but very bad at understanding how we do them, which leaves us unable to code them into computers, since coding normally requires a near-perfect understanding of what to do.

Q. Would it be technically possible to create an AGI based on hardware that exists today?

A. Probably, but we can’t say for sure. What’s lacking isn’t hardware, but ideas. We don’t currently have a design for AGI that would work even given huge hardware advances. Maybe "big data" and sheer computing power could solve AGI, but that doesn’t appear likely.

Q. Is fully understanding and reverse engineering the human brain critical to being able to build an AGI system?

A. I’d say that’s a sufficient condition, not a necessary one. Certainly, with that full understanding, we could likely build an AGI. But we might be able to build an AGI without copying the human brain at all. AGIs might be very alien minds indeed, completely different from anything human (all aliens in movies are essentially humans with some traits changed; an AGI could be much weirder than that). For instance, AIXI is a very simple design for a superintelligence (better than any other intelligence imaginable, in a certain sense), and it’s a mathematical formula, not a mind (there are various reasons why AIXI could never be built, though; it’s illustrative only).

Q. What do you think of the idea of using genetic algorithms within a virtual world to create an AGI, using natural selection to create AIs just as natural selection created biological intelligence within the real world?

A. There was a lot of excitement around that idea when it first came out, the usual AI hype, and then it faded. Genetic algorithms certainly have their place, but they’ve had a lot of years now to come up with AGI, and they’ve failed. Don’t rule them out – but don’t rule anything out at this point.

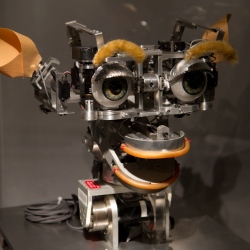

Q. How important is robotics when creating an AGI?

A. It could be very important, but robotics is more likely to matter for narrow AI than for AGI.

Q. Which company or project would you say is in the best position to make major breakthroughs in AI, who should we be watching?

A. Google or the US military, because they both have a lot of money and are willing to try out weird things. But neither is particularly likely to succeed (neither has any good plans or ideas for building an AGI); they’re just slightly less unlikely than other projects.

Q. What do you think will be the state of the AI field as a whole over the next 10 years? What breakthroughs can we expect? What about beyond?

A. Narrow AI will continue to progress. AGI is much harder to predict: it could happen in 10 years, but that seems unlikely. It’s as if we’re in 1790, asking how long it will be until the airplane; there’s just so much we need to discover before we can start to consider a reasonable timeline.

Q. Are quantum computers, such as the machines built by D-Wave, necessary to create AGI? How helpful are they, or will they be?

A. Very unlikely to be necessary; classical computers can do anything a quantum computer can do, just slower. They may be helpful, if certain AGI problems are of the right sort to work on a quantum computer. Quantum computers aren’t better than classical computers on a lot of problems – just on some.

Q. What are the biggest risks associated with AI?

A. The problem is that an AGI with human-level skills would become super-intelligent within a very short time: through copying itself and learning many skills simultaneously, through running much faster, and through having the skills to integrate all the narrow AI tools we’ve already created. And that doesn’t even count the effects of a super-intelligence trying to boost its own intelligence further.

Thus AGIs are likely to be extremely intelligent, and therefore extremely powerful. We don’t have any known ways of controlling such machines (ideas such as containing it in a box, or having human controllers oversee its decisions, fail once it develops the ability to manipulate those humans). So we have to specify, in advance, what we would want such an AGI to do. The problem is that AGIs are likely to treat as permissible anything we don’t explicitly tell them is not permissible, and they’d be able to think their way around any restrictions that weren’t perfectly phrased.

It’s very hard to code things that feel "obvious" to humans (see the previous point about Moravec’s paradox) into a computer, and here we essentially have to solve all of human ethics and philosophy (moderately impossible) and specify them in sufficient detail that they can be coded into a computer (very impossible). There are some workarounds for these difficulties, but they all seem to suffer from major flaws: they’re better than nothing, but we should only try them if we’re really desperate.

Q. On a related topic, you mentioned that intelligent life and the Fermi paradox interest you. Could you briefly outline the Fermi paradox?

A. Intelligent life can evolve: we are the proof of it. It does not seem hard, on the cosmic scale, to expand across the galaxy (we could probably do it quite efficiently within a thousand years, if we survive as a technological civilization). Expanding to other galaxies is comparably easy as well. There are many, many planets across the known universe where life could have evolved. And yet we see no sign of it.

Q. What would you suggest is the most likely explanation, according to insights from your studies?

A. My intuitive explanation is that life is much harder to get going than we think; it’s probably the central nervous system that is very tricky to evolve.

To find out more about Stuart’s work, please see the following:

Predicting AI: intelligence.org/files/PredictingAI.pdf

AI as an existential risk: http://www.youtube.com/watch?v=3jSMe0owGMs

Colonising the universe: http://www.youtube.com/watch?v=zQTfuI-9jIo