Researchers have created an algorithm that allowed a man to grasp objects with a prosthetic hand, powered by his thoughts. The technique, demonstrated with a 56-year-old man whose hand had been amputated, uses non-invasive brain monitoring, capturing brain activity to determine what parts of the brain are involved in grasping an object.

With that information, researchers created a computer program, or brain-machine interface (BMI), that harnessed the subject’s intentions and allowed him to successfully grasp objects, including a water bottle and a credit card. The subject grasped the selected objects 80 percent of the time using a high-tech bionic hand fitted to his stump.

Previous studies involving either surgically implanted electrodes or myoelectric control, which relies upon electrical signals from muscles in the arm, have shown similar success rates, according to the researchers. But the non-invasive method offers several advantages, says Jose Luis Contreras-Vidal, a neuroscientist and engineer at the University of Houston (UH).

It avoids the risks of surgically implanting electrodes by measuring brain activity via scalp electroencephalogram, or EEG. And myoelectric systems aren’t an option for all people, because they require that neural activity from muscles relevant to hand grasping remain intact.

The work, funded by the National Science Foundation, demonstrates for the first time EEG-based BMI control of a multi-fingered prosthetic hand for grasping by an amputee. It also could lead to the development of better prosthetics, Contreras-Vidal said.

Using non-invasive EEG also offers a new understanding of the neuroscience of grasping and will be applicable to rehabilitation for other types of injuries, including stroke and spinal cord injury, the researchers said.

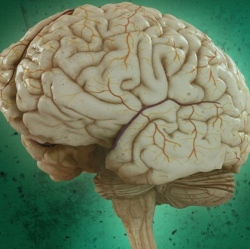

The study subjects were tested using a 64-channel active EEG, with electrodes attached to the scalp to capture brain activity. Brain activity was recorded in multiple areas, including the motor cortex and areas known to be used in action observation and decision-making, and occurred between 50 milliseconds and 90 milliseconds before the hand began to grasp.

That provided evidence that the brain predicted the movement, rather than reflecting it, Contreras-Vidal said.