The most recent expectations from last year’s supercomputing events, of 100 petaflops in 2015 and 1 exaflop in 2018, seem to have been too pessimistic after all. What’s enabling the sudden push?

Improvements in processors from Intel, AMD and Nvidia indicate that a 1U or blade HPC server will have 7 TFLOPs of peak performance in 2014. Some 15,000 such nodes would then be needed for 100 PFLOPs, and the next generation of chips, due in 2017, should be able to reach exaFLOP performance.

An upcoming massive jump in CPU FP (floating point) peak performance, together with the maturing of add-on accelerators, is what should enable us to see the world’s first 100 PFLOPs supercomputers some two years from now, and a further 10-times speedup not much more than two years after that.

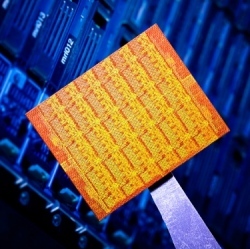

On the CPU front, from the Haswell generation onwards, all Intel CPUs will incorporate fused multiply-add (FMA) instructions, allowing for up to 16 double-precision or 32 single-precision FP ops per core per cycle. On, say, a 10-core Haswell-EP Xeon (or a similar Core iXX desktop variant) at 3.2 GHz, that would give you some 500 GFLOPs in double precision, or a nice round 1 TFLOPs of single-precision FP peak performance. Haswell-EP processors should be available by early 2014.
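The per-core figures come from Haswell's two FMA units, each working on 256-bit AVX vectors (4 doubles or 8 floats), with one FMA counting as two FP operations. A minimal sketch of the peak arithmetic for the hypothetical 10-core, 3.2 GHz part, assuming those unit counts and vector widths, looks like this:

```python
# Theoretical peak FLOPs for the hypothetical 10-core, 3.2 GHz Haswell-EP part.
# Peak = cores x clock x FP ops per core per cycle.

FMA_UNITS = 2          # assumption: two 256-bit FMA units per Haswell core
OPS_PER_FMA = 2        # one fused multiply-add counts as two FP operations
DP_LANES = 256 // 64   # doubles per 256-bit AVX register (4)
SP_LANES = 256 // 32   # floats per 256-bit AVX register (8)


def flops_per_cycle(lanes: int) -> int:
    """FP operations one core can retire per cycle at full FMA throughput."""
    return FMA_UNITS * OPS_PER_FMA * lanes


def peak_gflops(cores: int, clock_ghz: float, ops_per_cycle: int) -> float:
    """Theoretical peak in GFLOPs: cores x GHz x ops per core per cycle."""
    return cores * clock_ghz * ops_per_cycle


dp = peak_gflops(10, 3.2, flops_per_cycle(DP_LANES))
sp = peak_gflops(10, 3.2, flops_per_cycle(SP_LANES))
print(f"DP peak: {dp:.0f} GFLOPs, SP peak: {sp:.0f} GFLOPs")
```

This yields 512 GFLOPs DP and 1024 GFLOPs SP, which the text rounds to 500 GFLOPs and 1 TFLOPs. Real sustained throughput will be lower, since peak assumes every cycle issues two full-width FMAs.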

AMD CPUs already implement FMA and, hopefully with the Steamroller core performance improvements from 2013 onwards, AMD should have similarly performing CPUs in the 2014 timeframe as well.

Then we add the accelerators: AMD’s Radeon HD 7970 (Graphics Core Next) already touches 1 TFLOPs DP FP and nearly 4 TFLOPs SP FP peak performance. The upcoming GK110 from Nvidia, as well as the top bins of AMD’s Sea Islands, should exceed 1.5 TFLOPs DP FP well before Christmas comes around. Add to that Intel MIC, whose performance is in a similar ballpark. All these cards will have humongous local memory, anywhere from 6 to 16 GB per card, to keep processing local without having to hop onto the slow PCIe bus too often. Each of these architectures may have its own strong point: Nvidia with CUDA, AMD with OpenCL, and Intel with x86 inline programming, which lets MIC be used as if it were a simple co-processor to the CPU. And their successors are all expected to approach 3 TFLOPs DP FP peak performance per chip by mid-2014.
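GPU peak figures are derived the same way as CPU ones: shader lanes x clock x 2 (for FMA), then scaled by the card's double-precision rate. The sketch below assumes reference Radeon HD 7970 specifications (2048 stream processors at 925 MHz with a 1/4 DP rate); these inputs are not from the text above and are labeled as assumptions in the code:

```python
# Back-of-envelope peak for a GPU accelerator: lanes x clock x 2 (an FMA
# counts as two ops), then scaled by the card's DP:SP throughput ratio.
# Assumed inputs: reference Radeon HD 7970, 2048 stream processors,
# 925 MHz core clock, double precision at 1/4 of the single-precision rate.


def gpu_peak_tflops(lanes: int, clock_ghz: float, dp_ratio: float) -> tuple[float, float]:
    """Return (single-precision, double-precision) theoretical peak in TFLOPs."""
    sp = lanes * clock_ghz * 2 / 1000.0  # GFLOPs -> TFLOPs
    return sp, sp * dp_ratio


sp, dp = gpu_peak_tflops(lanes=2048, clock_ghz=0.925, dp_ratio=0.25)
print(f"HD 7970: {sp:.2f} TFLOPs SP, {dp:.2f} TFLOPs DP")
```

Under these assumptions the result is roughly 3.79 TFLOPs SP and 0.95 TFLOPs DP, consistent with "nearly 4" and "touches 1" above. The same function can be reused for other cards by plugging in their lane count, clock and DP ratio.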

So, if we assume a simple 1U or blade HPC server node with 2 of these CPUs and 2 accelerators, plus memory and a high-speed interconnect, we would have 1 TFLOPs of DP FP CPU performance plus 6 TFLOPs of DP FP accelerator resource in a single node: a total of 7 TFLOPs theoretical peak. Therefore, for 100 PFLOPs, we’d need some 15,000 nodes. At up to 80 nodes per double-sided, standard 19-inch rack, plus some space for admin nodes, switches and the like, not to mention power distribution and cooling, 200 racks could house the compute engines of a 100 PFLOPs system in mid-2014. That’s a manageable size for a data center facility these days, although storage, UPS and other requirements will add to that footprint, of course.
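The node and rack arithmetic can be checked with a short sizing sketch. It uses the per-chip DP figures and the 80-nodes-per-rack density from the paragraph above; the function names are illustrative only:

```python
import math

# Sizing sketch for the hypothetical 2014 node: 2 CPUs at ~0.5 TFLOPs DP each
# plus 2 accelerators at ~3 TFLOPs DP each (figures taken from the text).
CPU_DP_TFLOPS = 0.5
ACCEL_DP_TFLOPS = 3.0
NODES_PER_RACK = 80      # double-sided 19-inch rack, per the text
TARGET_PFLOPS = 100.0


def node_peak_tflops(cpus: int = 2, accels: int = 2) -> float:
    """Theoretical DP peak of one node in TFLOPs."""
    return cpus * CPU_DP_TFLOPS + accels * ACCEL_DP_TFLOPS


def nodes_needed(target_pflops: float, per_node_tflops: float) -> int:
    """Smallest whole number of nodes whose summed peak reaches the target."""
    return math.ceil(target_pflops * 1000 / per_node_tflops)


per_node = node_peak_tflops()
nodes = nodes_needed(TARGET_PFLOPS, per_node)
racks = math.ceil(nodes / NODES_PER_RACK)
print(f"{per_node} TFLOPs/node -> {nodes} nodes -> {racks} compute racks")
```

This gives 7.0 TFLOPs per node, 14,286 nodes and 179 racks of pure compute. Rounding up to 15,000 nodes and 200 racks, as the text does, leaves headroom for admin nodes, switches, power distribution and cooling.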